Orit Shaer, Class of 1966 Associate Professor of Computer Science, received two grants from the National Science Foundation (NSF) in support of collaborative research in the Wellesley HCI Lab:

The first grant, UbiqOmics: HCI for augmenting our world with pervasive personal and environmental omic data, continues our previous grant on HCI for personal genomics. This is a collaborative grant with Oded Nov (NYU) and Mad Ball (Open Humans). Recent years have seen a sharp increase in the availability of personal and environmental ‘omic’ data (e.g., data about genomes or microbiomes) to non-experts. The project will focus on human-computer interaction for UbiqOmic environments: living spaces and social interactions where omic data is available about people, plants, animals, and surfaces. In particular, the team will identify user needs and develop web-based visual tools that integrate omic data sets from heterogeneous sources and multiple samples. These tools will allow users to aggregate, explore, relate, and connect pervasive omic information, and will facilitate collaborative sensemaking of omic information within various social contexts, including families and cohabiting communities. In addition, the project will harness augmented reality (AR) to visualize the invisible: the team will design, develop, and evaluate an AR interface that overlays timely and actionable omic data on the environment and on the user’s own body (oral, gut, skin). Jennifer Otiono ’18 joined the HCI Lab as a post-bac research fellow on this project.

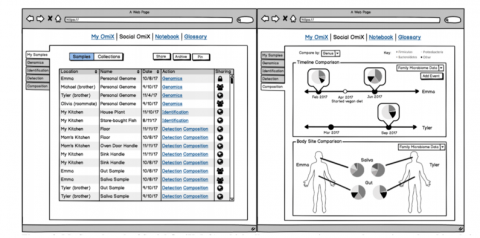

A design for a Social OmiX app, which allows users to view, sort, share, pin, and archive their own omic samples as well as samples that others have shared with them.

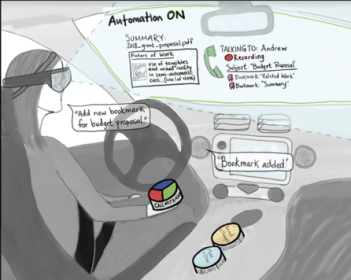

The second grant is a new large collaborative project funded by the NSF program on the Future of Work, titled The Next Mobile Office: Safe and Productive Work in Automated Vehicles. This is a collaboration with Andrew Kun (University of New Hampshire), Raffaela Sadun (Harvard Business School), Linda Boyle (University of Washington), and John Lee (University of Wisconsin). The project focuses on understanding how technology can allow commuters in highly automated (but not yet fully autonomous) cars to safely combine or switch between work and driving tasks. We will develop a new multi-interface in-vehicle environment that supports both work-related tasks and safe driving in automated vehicles, and we will test it in driving simulators and real vehicles. We believe the project's innovative contributions to in-vehicle speech and spoken interaction, augmented reality, and tangible user interfaces will apply to a broad range of settings, including use by non-drivers and mobile environments beyond the car. The project also includes activities to promote the participation of women in science, technology, engineering, and mathematics (STEM). Diana Tosca ’18 joined this project as a post-bac research fellow.
