On 3 October 2018, Suresh Venkatasubramanian, of the University of Utah, gave a colloquium talk on The Computational and Ethical Ramifications of Automated Decision-Making in Society.

Author: Editor

Over the summer of 2018, Ashley DeFlumere, former Lecturer in Computer Science at Wellesley, moved on to Ireland, where she has joined Intel Labs as a research scientist. Ashley is missed around the department at Wellesley, where she taught CS 230 Data Structures, CS 240 Foundations of Computer Systems, and a course on her research area, Scientific and Parallel Computing, not to mention helping to anchor the department’s extracurricular activities. Behind the scenes, she also led the foundation and development of peer mentoring and tutor training programs that have become valuable additions to CS courses. Best wishes!

Emma Lurie ’19 presented Investigating the Effects of Google’s Search Engine Result Page in Evaluating the Credibility of Online News Sources, a paper coauthored with Eni Mustafaraj, in May at the ACM WebSci 2018 conference in Amsterdam.

As a result of the discussion of this paper, a challenge to improve Wikipedia was created. Students in the Wellesley Cred Lab, led by Mustafaraj, are preparing Wikipedia edit-a-thons to involve Wellesley students in the challenge, and will be responsible for measuring the page creation and author participation impacts of the full challenge.

Orit Shaer, Class of 1966 Associate Professor of Computer Science, received two grants from NSF in support of collaborative research in the Wellesley HCI Lab:

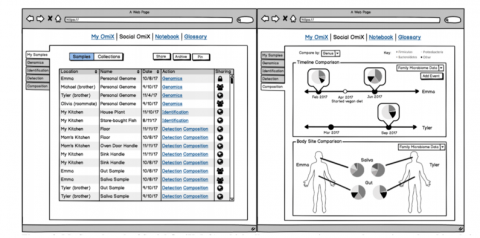

UbiqOmics: HCI for augmenting our world with pervasive personal and environmental omic data, is a continuation of our previous grant on HCI for personal genomics. This is a collaborative grant with Oded Nov (NYU) and Mad Ball (Open Humans). The project will focus on human-computer interaction for UbiqOmic environments: living spaces and social interactions where omic data is available about people, plants, animals, and surfaces. Recent years have seen a sharp increase in the availability of personal and environmental ‘omic’ data (e.g. data about genomes or microbiomes) to non-experts. In particular, the team will identify user needs and develop web-based visual tools that integrate omic data sets from heterogeneous resources and multiple samples. These tools will allow users to aggregate, explore, relate, and connect pervasive omic information, and facilitate collaborative sense making of omic information within various social contexts including families and cohabiting communities. In addition, the project will harness the power of augmented reality (AR) to visualize the invisible, designing, developing, and evaluating an AR interface which overlays timely and actionable omic data in the environment and on the user’s own body (oral, gut, skin). Jennifer Otiono ’18 joined the HCI Lab as a post-bac research fellow on this project.

A design for a Social OmiX app, which allows users to view, sort, share, pin, and archive their omic samples and the samples of others that have been shared with them.

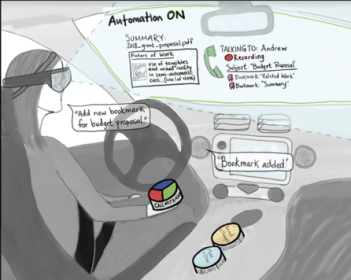

The second grant is a new large collaborative project funded by the NSF program on the Future of Work, titled The Next Mobile Office: Safe and Productive Work in Automated Vehicles. This is a collaboration with Andrew Kun (University of New Hampshire), Raffaela Sadun (Harvard Business School), Linda Boyle (University of Washington), and John Lee (University of Wisconsin). The project focuses on understanding how technology can allow commuters in highly automated (but not yet fully autonomous) cars to safely combine or switch between work and driving tasks. We will develop a new multi-interface in-vehicle environment that supports work-related tasks as well as safe driving in automated vehicles, and will test it in driving simulators and real vehicles. We believe that the innovative contributions to the in-vehicle use of speech and spoken interactions, augmented reality, and tangible user interfaces will have applicability to a broad range of settings, including for non-drivers and in mobile environments beyond the car. The project also includes activities to promote the participation of women in Science, Technology, Engineering and Mathematics. Diana Tosca ’18 joined this project as a post-bac research fellow.

At the 31st Florida Artificial Intelligence Research Society Conference (FLAIRS), sponsored by AAAI:

Eni Mustafaraj presented Task-specific Language Modeling for Selecting Peer-written Explanations, a paper coauthored with Khonzoda Umarova ’20, Lyn Turbak, and Sohie Lee, on a CS 111 assignment that asks students to explain bugs and the debugging process and deploys artificial intelligence to help distinguish strong explanations from weak ones.

At the conference, Khonzoda Umarova ’20 also presented a poster on Recognizing and Exemplifying Gender Bias in Online Articles, based on research work with Mustafaraj.

CS Majors from the Class of 2018 presented their thesis work:

Eliza McNair ’18

adVantage – Seeing the Universe: How Virtual Reality can Further Augment a Three-Dimensional Model of a Star-Planet-Satellite System for Educational Gain in Undergraduate Astronomy Education

This thesis introduces the “adVantage – Seeing the Universe” system, a learning environment designed to augment introductory undergraduate astronomy education. The goal of the adVantage project is to show how an immersive virtual reality (VR) environment can be used effectively to model the relative sizes and distances between objects in space. To this end, adVantage leverages the benefits of three-dimensional models by letting users observe and interact with astronomical phenomena from multiple perspectives. The system uses pre-set vantage points to structure students’ progress through a variety of “missions” designed to improve their understanding of scale. The adVantage system departs from two-dimensional textbook illustrations by adding navigable depth to a star-planet-satellite system, and distinguishes itself from existing pedagogical 3D space-simulation environments (that we know of) by establishing a laboratory for student investigation. Students exploring in adVantage will be able to observe the relative sizes and orbital movements of the subjects of the system: the exoplanet WASP-12b, its Sun-like star, WASP-12, and imagined satellites constructed to resemble the Earth and its Moon. This combination of astronomical bodies will engage students by introducing the new star-exoplanet system and provide context by incorporating familiar elements. We have already implemented a JavaScript prototype of the adVantage system and are developing the VR system using the game engine Unity and the VR system SteamVR. Students will interact with adVantage using an HTC Vive headset and hand controllers. We will carry out preliminary investigations of student response to the system when the immersive version of adVantage is complete.

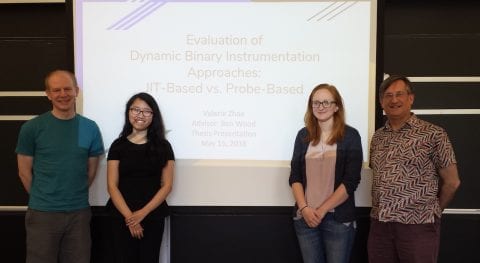

Valerie Zhao ’18

Evaluation of Dynamic Binary Instrumentation Approaches: Dynamic Binary Translation vs. Dynamic Probe Injection

From web browsing and bank transactions to data analysis and robot automation, just about any task requires or benefits from software. Ensuring that a piece of software is effective requires profiling the program’s behavior to evaluate its performance, debugging the program to fix incorrect behaviors, and examining the program to detect security flaws. These tasks are made possible by instrumentation, the method of inserting code into a program to collect data about its behavior. Dynamic binary instrumentation (DBI) enables programmers to understand and reason about program behavior by inserting code into a binary at run time to collect relevant data. It is more flexible than static or source-code instrumentation, but it incurs run-time overhead. This thesis attempts to extend the existing characterization of the tradeoffs between dynamic binary translation (DBT) and dynamic probe injection (DPI), two popular DBI approaches, using Pin and LiteInst as sample frameworks. It also describes extensions to the LiteInst framework that enable it to instrument function boundaries more effectively. Evaluations found that programs instrumented with LiteInst ran up to 80× slower than the original binary, while Pin incurred at most a 10× slowdown, suggesting that DBT is more efficient in some respects.
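For readers unfamiliar with instrumentation, the idea of collecting data at function boundaries can be illustrated with a minimal Python sketch. This is not binary instrumentation and has nothing to do with how Pin or LiteInst work internally; it relies on the interpreter's `sys.setprofile` hook, and the helper `count_calls` and the sample function `fib` are hypothetical names chosen for illustration.

```python
import sys
from collections import Counter


def count_calls(func, *args):
    """Run func(*args) and count entries into each Python function."""
    counts = Counter()

    def probe(frame, event, arg):
        # The interpreter reports a "call" event at every Python
        # function entry, which plays the role of a function boundary.
        if event == "call":
            counts[frame.f_code.co_name] += 1

    sys.setprofile(probe)      # install the probe at run time
    try:
        result = func(*args)
    finally:
        sys.setprofile(None)   # always remove the probe afterwards
    return result, counts


def fib(n):
    """Deliberately naive recursion, so there are many calls to count."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


result, counts = count_calls(fib, 10)
print(result, counts["fib"])   # prints: 55 177
```

The probe runs on every function entry, so the instrumented run is slower than the plain one. That extra cost is the kind of run-time overhead the thesis measures for binary-level tools.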

Maja Svanberg ’18

Suggested Blocks: Using Neural Networks To Aid Novice Programmers In App Inventor

MIT App Inventor is a programming environment in which users build Android applications by connecting blocks together. Because its main audience is beginner programmers, it is important that users are given the proper guidance and instruction to successfully become creators. In order to offer this help, App Inventor provides text-based tutorials that describe the workflow of example programs to users. However, studies have shown that out-of-context help such as tutorials has little to no effect on learning, and when given the choice, users prefer in-context hints and suggestions. In order for users to overcome some of the barriers with self-training, we need to provide them with relevant information and in-context suggestions. Therefore, I am introducing Suggested Blocks, a data-driven model that leverages machine learning to provide users with relevant suggestions of which blocks to include in their programs.

In this project, I focused on developing the neural networks to power a suggested blocks system. Using original apps from real App Inventor users, I developed a set of experiments to discover plausible vector representations of the data, including tree traversals, n-grams, and tree structures, as well as different network architectures to generate the best possible block suggestions for the users. The objective is not only to be accurate, but to provide suggestions that are sensible, relevant, and most importantly, educational. When simulating the best model on reconstructing an original project from a novice user, suggesting only 10 blocks at a time, the user would be able to drag-and-drop 60% of her blocks straight from the Suggested Blocks drawer. Overall, the results show promise for a future implementation of a Suggested Blocks system.
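The evaluation idea described above (suggest k blocks at a time, then measure how many of a project's blocks appear among the suggestions) can be sketched with a much simpler stand-in model. The sketch below uses plain bigram counts rather than the neural networks developed in the thesis. The block names, the toy corpus, and the functions `train_bigrams`, `suggest`, and `hit_rate` are all hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(programs):
    """Count which block follows which across a corpus of block sequences."""
    following = defaultdict(Counter)
    for blocks in programs:
        for prev, nxt in zip(blocks, blocks[1:]):
            following[prev][nxt] += 1
    return following


def suggest(following, prev_block, k=10):
    """Return up to k blocks most often seen right after prev_block."""
    seen = following.get(prev_block, Counter())
    return [block for block, _ in seen.most_common(k)]


def hit_rate(following, program, k=10):
    """Fraction of blocks (after the first) found in the top-k suggestions."""
    pairs = list(zip(program, program[1:]))
    if not pairs:
        return 0.0
    hits = sum(nxt in suggest(following, prev, k) for prev, nxt in pairs)
    return hits / len(pairs)


# Toy corpus with made-up block names (an assumption, not App Inventor data).
corpus = [
    ["when_click", "set_text", "play_sound"],
    ["when_click", "set_text", "set_color"],
    ["when_click", "play_sound"],
]
model = train_bigrams(corpus)

print(suggest(model, "when_click", k=1))                            # ['set_text']
print(hit_rate(model, ["when_click", "set_text", "vibrate"], k=1))  # 0.5
```

A hit rate of 0.5 here means that half of the blocks in the reconstructed program could have been taken directly from the suggestion list, which is the same kind of figure as the 60% reported above for the best model.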

Lip Sync veterans Jean Herbst and Eni Mustafaraj returned to the 2018 SCI Faculty Lip Sync with new stars Cibele Freire and Scott Anderson, introducing their latest hit, New Rules, a collaboration with Do a Loopa. See how many CS references you can pick up in their subtle lyric engineering and moves!

Assistant Professor of Computer Science Eni Mustafaraj was awarded the highly competitive National Science Foundation CAREER grant for early-career faculty in support of her work on “Signals for Evaluating the Credibility of Web Sources and Advancing Web Literacy.” The 5-year $460,610 grant will support Eni’s work with several student researchers under her leadership in the Wellesley Cred Lab.

Eni’s project will identify and implement signals about online sources that will help users assess their credibility (should you believe what this source is writing about climate science or gender equality?). These signals can be used to augment search results, for example in Google. A concrete example in one of Eni’s blog posts uses the metaphor of “nutrition labels” to explain this augmentation.