I am very happy that the digitization of the Browning love letters has received all the attention it deserves. Perfect timing and excellent collaboration with Baylor University are making it possible for a large audience to access letters that scholars previously had to visit our Special Collections to see. I am also thrilled that we have been able to finalize the schedule for “Liberal Arts Learning in the Digital Age”. I strongly encourage everyone on campus to participate. After all, we are discussing the future of the Library and Technology on campus, and we want the community’s input on these matters.

The “flattened world” resulting from the internet and the web has clear advantages, but it has also exposed the weaknesses in software technologies. The reason is that the technologies driving the internet are advancing much faster than software development tools, and it is extremely hard to keep up. Software developers build expertise by spending a lot of time learning the tools of the trade in a select few “systems” – be they programming languages or development platforms. Moving from one to the next every few months or years is a daunting task, and every time you do it, you are basically starting over. When you start over, you are likely to make mistakes – or introduce “bugs”.

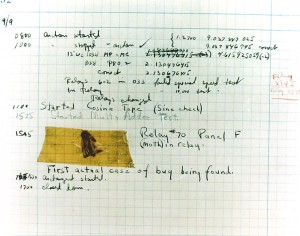

The term “bug” is often traced back to a failure of an early computing machine, the Harvard Mark II, in 1947, caused by a moth that got trapped in a relay. The moth was carefully saved in a logbook. The image shown here is from Wikimedia Commons (http://en.wikipedia.org/wiki/File:H96566k.jpg) and the actual logbook is preserved in the National Museum of American History. The term “debugging” therefore refers to the act of locating and removing such bugs. However, like everything else in life, the attribution of the term to this story remains unresolved – apparently it was already used by aeronautical engineers in 1945.

When I was doing my master’s degree in computer science, I had some terrific teachers. Some of them had extensive knowledge beyond the textbooks because they had worked in the industry, a very young one in the mid-80s. It was either a course in Formal Languages or one in Analysis of Algorithms where we studied how to prove the correctness of algorithms. At least at that time, proving the correctness of an algorithm that was more than a few lines long was impossible. So we were trained to use counterexamples to show why an algorithm is incorrect.
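To make the counterexample idea concrete, here is a minimal Python sketch. Everything in it is made up for illustration: `buggy_max` is a deliberately flawed function, and `find_counterexample` is a hypothetical helper that tries every small input until the flawed function disagrees with a trusted reference (Python’s built-in `max`).

```python
from itertools import product


def buggy_max(values):
    """Deliberately flawed maximum: starts from 0 instead of the first value."""
    largest = 0  # bug: wrong whenever every value is negative
    for value in values:
        if value > largest:
            largest = value
    return largest


def find_counterexample(candidate, reference, domain=range(-2, 3), max_len=3):
    """Try every list of up to max_len values drawn from domain.

    Returns the first input on which candidate and reference disagree,
    or None if they agree on all of the inputs tried.
    """
    for length in range(1, max_len + 1):
        for case in product(domain, repeat=length):
            if candidate(list(case)) != reference(list(case)):
                return list(case)
    return None


print(find_counterexample(buggy_max, max))  # a one-element negative list
print(find_counterexample(max, max))        # None: no disagreement found
```

A single failing input is enough to show that the function is incorrect. Finding none proves nothing beyond the inputs that were tried, which is exactly why this is so much easier than a proof of correctness.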

It is next to impossible to write software that is “formally” correct. The reason is that the definition of “correctness” is not clear when you design complex systems. Sorting real numbers has a clear definition, so algorithms can be developed and, most importantly, tested to make sure that the results are accurate. Of course, the algorithm has to verify that the inputs are real numbers and nothing else, and that they are provided in the decimal system (and not in Greek!). One should then try many variations of inputs and verify that the output is correct in each and every case. An algorithm being accurate is one thing, but perception is another, and perception matters a lot. The algorithm may be accurate but written inefficiently. A user may wait a long time to sort a million numbers and give up, blaming the software. Or they may start a long sort that aborts, either because there was not enough memory or because a scheduled server downtime killed the process halfway through. Even in a “well-defined” case like this, algorithms need to worry about perceptions – they need to be fast and anticipate issues. If the sort requires a lot of memory, assess that early and let the user know that there is not enough; if the user is starting too close to a scheduled downtime, warn them that they may be in trouble. Easier said than done!
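Here is a rough Python sketch of the two kinds of checks described above: verifying the inputs, and warning the user before a long sort starts. The function names, the two-copies memory estimate, and the default speed figure are all assumptions made up for illustration, not measurements from any real system.

```python
import math


def parse_reals(tokens):
    """Convert decimal strings to floats, rejecting anything else."""
    values = []
    for token in tokens:
        try:
            value = float(token)
        except ValueError:
            raise ValueError(f"not a decimal number: {token!r}") from None
        if not math.isfinite(value):
            # float() accepts "nan" and "inf", which are not real numbers
            raise ValueError(f"not a finite real number: {token!r}")
        values.append(value)
    return values


def preflight(count, available_bytes, seconds_until_downtime,
              bytes_per_value=8, comparisons_per_second=1_000_000):
    """Return warnings to show the user before sorting `count` values.

    Assumes the sort keeps the input plus one sorted copy in memory and
    performs roughly count * log2(count) comparisons.
    """
    warnings = []
    needed_bytes = 2 * count * bytes_per_value
    if needed_bytes > available_bytes:
        warnings.append(
            f"need about {needed_bytes} bytes, only {available_bytes} available")
    estimated_seconds = count * math.log2(max(count, 2)) / comparisons_per_second
    if estimated_seconds > seconds_until_downtime:
        warnings.append(
            f"sort may take about {estimated_seconds:.0f} s, "
            f"but downtime starts in {seconds_until_downtime} s")
    return warnings


values = parse_reals(["3.5", "-2", "10"])
for warning in preflight(len(values), available_bytes=10**9,
                         seconds_until_downtime=3600):
    print("Warning:", warning)
print(sorted(values))
```

The estimates are crude on purpose. The point is only that these questions get asked before the sort begins, not discovered halfway through.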

There have been some really fascinating stories about “incorrect” code. One that I will always remember is about a minor error in a Fortran program that supposedly cost NASA a lot of money. Rumor has it that this caused Mariner 1 to crash. However, this has been dismissed. Still, it highlights the ease with which such errors can happen. Basically, Fortran removes all the blanks (blanks are there only for readability in Fortran!) and then looks ahead in the statement to determine what kind of statement it is parsing. For example, Do 230 I=1,3000 is a statement that says: repeat everything between this line and the line with statement number 230, 3000 times. However, one small mistake, Do 230 I=1.3000, has a devastating result. Fortran relies on the comma to decide whether this is a do loop or not. In the latter case, it does not see the comma, and since all blanks are removed, the statement reads Do230I=1.3000, which is a perfectly valid Fortran statement that assigns the decimal value 1.3000 to a variable called Do230I. Instead of a loop, you have none. There are many other interesting and hard-to-detect bugs like this, some of which still bother me because I have been caught making them.
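The comma-versus-period problem can be imitated in a few lines of Python. This is only a toy, not how a real Fortran compiler is written: the hypothetical `classify_statement` function strips the blanks and then uses two simplified patterns to decide between a do loop and an assignment.

```python
import re


def classify_statement(line):
    """Toy classifier for two old-style Fortran statement forms.

    Blanks are removed first, as described above; a comma after the '='
    is what makes the statement a do loop.
    """
    compact = line.replace(" ", "").upper()

    # DO <label> <variable> = <start> , <end>
    do_loop = re.fullmatch(r"DO(\d+)([A-Z][A-Z0-9]*)=([^,]+),(.+)", compact)
    if do_loop:
        label, variable, start, end = do_loop.groups()
        return ("do_loop", label, variable, start, end)

    # <variable> = <expression>
    assignment = re.fullmatch(r"([A-Z][A-Z0-9]*)=(.+)", compact)
    if assignment:
        name, expression = assignment.groups()
        return ("assignment", name, expression)

    return ("unknown", compact)


print(classify_statement("DO 230 I=1,3000"))
# ('do_loop', '230', 'I', '1', '3000')
print(classify_statement("DO 230 I=1.3000"))
# ('assignment', 'DO230I', '1.3000')
```

One character changes the statement from a loop into an assignment, and nothing in the second line looks wrong enough for the toy parser to complain.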

No real-life example is as simple as a sort, and one can imagine how complex they can be. A software developer rarely knows how the software is going to be used, and its original intent rarely matters. You can certainly say (and many vendors do, to the annoyance of many users), “We developed this software for this purpose, the user manual clearly states how to use it, and we are not responsible for any variations thereof”. But we all know it does not work that way. There is a large language gap between the producers and the buyers to start with. Buyers therefore acquire software “thinking” that it will do something they need, based on the information the seller gave. And then they find out that it really doesn’t do what they thought it would.

This is why the actual writing of a program is only a small part of software development. Testing and refining are a huge part of it. And testing cannot be done only by the person who writes the code – by definition, coders think they are always right, so having them test will not yield anything useful. Subject matter experts and usability testers play important roles. A coder may have written the software expecting that users will follow the instruction not to hit the browser’s back button (because the code does not account for it!). The usability expert will come and say – no, everyone uses the back button, so fix your code. Or the subject matter expert who requested the software will ask the programmer to change the language used.

The process is highly cyclical and, unfortunately, never finished! In big organizations that have a process and rules, this works differently – when a project manager who is authorized to move the project forward tells the programmer that “the usability expert is correct, so please apply the changes and deliver the next version for testing in a week”, it gets done. In small and friendly organizations like Higher Ed IT, it is very different. The process sometimes gets very personal, staff get stressed, and sometimes personal relationships fall through the cracks. Sometimes the functional users who ask for software do not clearly understand their responsibilities, especially the time it takes to test. They think that the programmer knows it all and is also responsible for all the testing. When things don’t work, the finger-pointing starts – “you were supposed to check this out!”, “you never told me that I had to do all the checks” – which adds a whole new layer of stress and inefficiency to the process.

This is why it is extremely important to “manage” these projects. Establish clear roles and responsibilities up front. Get buy-in from some senior folks so that if the time commitment from the functional offices is not being met, you have a place to go and ask for help. Be realistic about what can be delivered and when. Stick to consistency and don’t get drawn into too much personalization and “coolness” (because each of these is costly on all counts). And make it clear that software is not perfect: rather than promising to deliver something that will never fail, promise that if a problem is within our control, we will attend to it and correct it as soon as we can.

Finally, something that I am repeating for the umpteenth time – think reusable code and frameworks. Why? Because once verified, they work. Also, you need to make changes in only one place. Pour all your creative juices into developing these frameworks rather than into the individual software and systems you may develop.

Now, on to discovering and removing that next bug!